Artificial Intelligence has revolutionised numerous sectors, offering unprecedented efficiencies and capabilities. However, as with any powerful tool, AI’s potential for misuse has become increasingly evident. From deepfakes and cyber threats to the proliferation of misinformation, the darker facets of AI present significant challenges that demand our attention.

Deepfakes: Blurring the Lines Between Reality and Fabrication

Deepfakes, AI-generated synthetic media, have advanced rapidly, making it increasingly difficult to distinguish between genuine and fabricated content. These hyper-realistic videos and audio clips can depict individuals saying or doing things they never did, posing threats to personal reputations, political stability, and societal trust (Global Taiwan Institute).

Malicious actors have recently used deepfakes to impersonate senior officials in an effort to deceive and manipulate their targets. The FBI has issued warnings about such schemes, highlighting the risks of AI-driven impersonation. Moreover, because deepfake tools are widely accessible, even people without technical expertise can produce convincing fake content, amplifying the potential for misuse (Reuters).

Cyber Threats: AI as a Double-Edged Sword

While AI enhances cybersecurity measures, it simultaneously equips cybercriminals with sophisticated tools to launch more effective attacks. AI-driven phishing emails, for instance, have become more convincing, with impeccable grammar and formatting, making them harder to detect (TechRadar).

Kevin Mandia, founder of the cybersecurity firm Mandiant, has warned that AI-powered cyberattacks could become a reality within a year. He cautions that such attacks may be hard to trace, because the attackers' use of AI tools could itself go undetected (Axios).

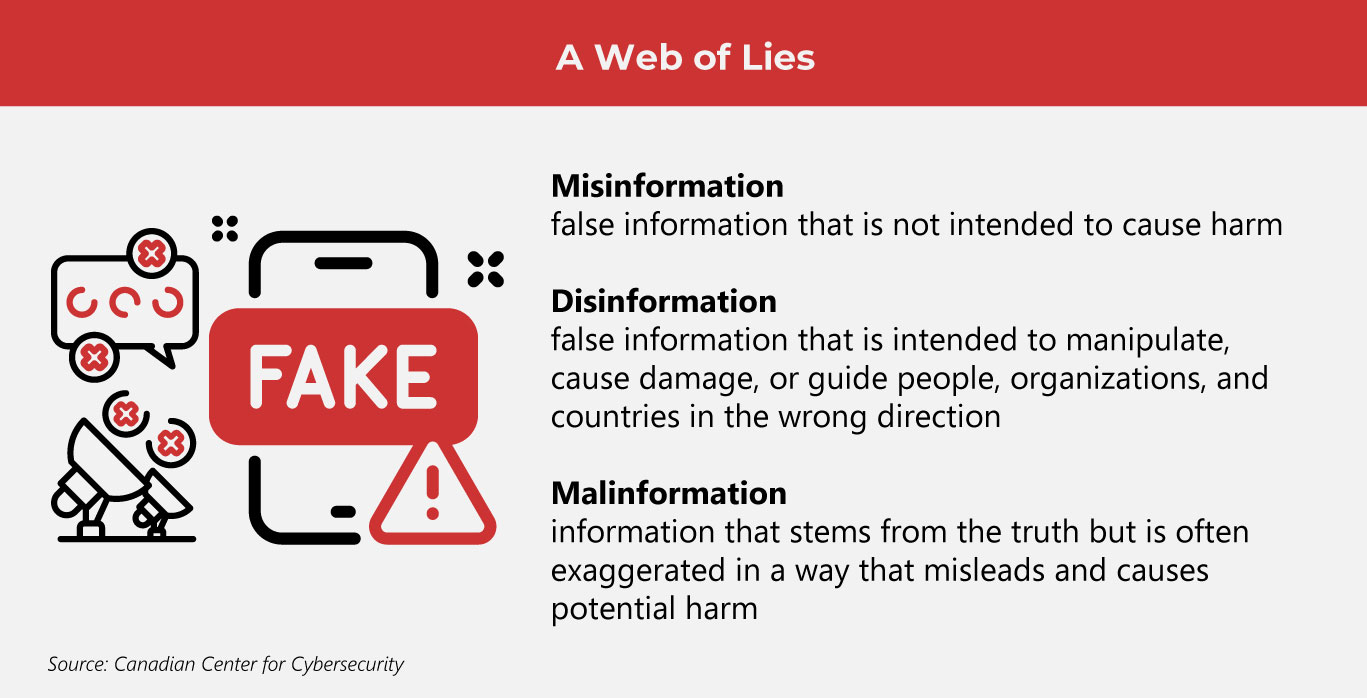

Misinformation: The Rapid Spread of False Narratives

AI’s capability to generate content at scale has led to an explosion of misinformation. From fake news articles to manipulated images and videos, AI-generated content can spread rapidly across social media platforms, influencing public opinion and sowing discord.

The World Economic Forum’s Global Risks Report 2024 identifies misinformation and disinformation as the most severe global risk over the next two years. The report highlights how AI can amplify manipulated information, potentially destabilising societies (World Economic Forum).

The Erosion of Trust and Societal Implications

The proliferation of AI-generated deepfakes and misinformation erodes public trust in media, institutions, and even personal relationships. As individuals become more sceptical of the content they consume, distinguishing between truth and fabrication becomes increasingly challenging.

This erosion of trust has tangible consequences. During the 2024 election cycle, for instance, when many countries went to the polls, concerns that AI-generated misinformation could sway voter perceptions were widespread. Although the actual impact proved limited, the mere possibility of such interference underscores the need for vigilance (Financial Times).

Mitigating the Dark Side of AI

Addressing the challenges posed by AI’s darker applications requires a multifaceted approach:

Regulation and Policy: Governments must establish clear guidelines and regulations to govern the use and development of AI technologies, ensuring accountability and ethical standards.

Technological Solutions: Investing in AI-driven tools that can detect and flag deepfakes and misinformation is crucial. Companies such as OpenAI are developing tools to verify the authenticity of digital content (WIRED).

Public Awareness and Education: Educating the public about the existence and risks of AI-generated content can empower individuals to critically assess the information they encounter.

Collaboration: Stakeholders, including tech companies, governments, and civil society, must collaborate to share knowledge, resources, and strategies to combat the misuse of AI.

Final Thought

While AI offers immense benefits, its potential for misuse cannot be ignored. Deepfakes, cyber threats, and misinformation represent significant challenges that require proactive and collaborative efforts to address. By recognising these risks and implementing comprehensive strategies, we can harness AI’s capabilities responsibly, ensuring it serves as a force for good rather than a tool for deception.

Victor A. Lausas

Chief Executive Officer